Alia Bhatt’s Deepfake Video: A Wake-Up Call to the Deepfake Menace

In the age of digital manipulation, where the lines between reality and fabrication blur, the emergence of deepfake technology has sent shockwaves through society. Deepfakes, hyper-realistic videos or audio recordings that replace one person’s face or voice with another’s, can cause irreparable harm to individuals, spread misinformation, and manipulate public opinion. The recent deepfake video of Indian actress Alia Bhatt, which went viral on social media, serves as a stark reminder of the dangers posed by this technology and the urgent need for safeguards.

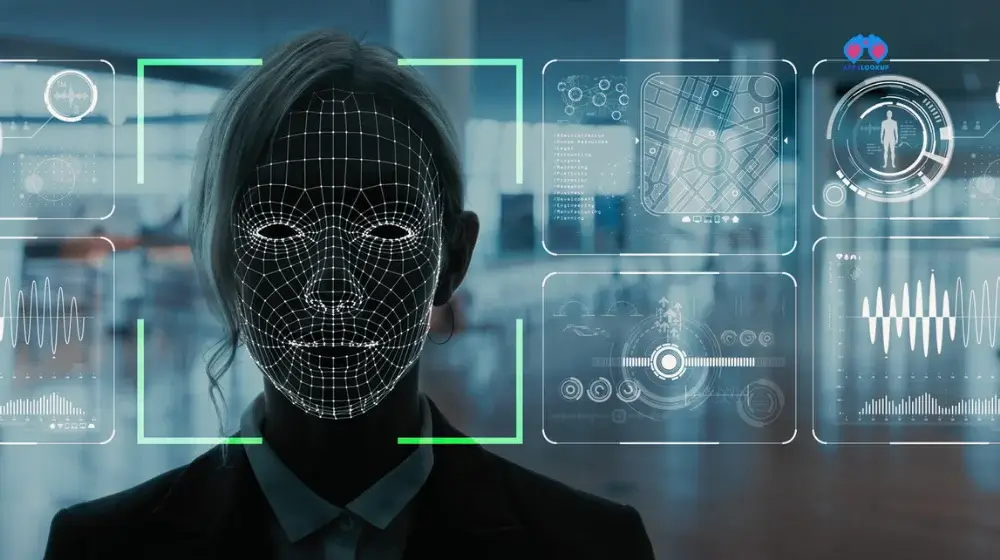

Deepfakes are created using artificial intelligence (AI) and machine learning techniques that can seamlessly blend one person’s likeness onto another. The technology is rapidly advancing, making it increasingly difficult to distinguish between real and fake content. This raises serious concerns about the potential for deepfakes to be used for malicious purposes, such as tarnishing reputations, spreading propaganda, and even influencing elections.

Alia Bhatt’s Deepfake Video: A Catalyst for Addressing Deepfake Risks

The deepfake video of Alia Bhatt, which superimposed her face onto an adult film actress, caused widespread outrage and distress. The actress voiced her concern about the video’s potential to damage her career and reputation, highlighting the profound impact deepfakes can have on individuals. The incident also sparked a much-needed conversation about the ethical implications of deepfake technology and the need for responsible use.

The dangers of deepfakes extend beyond individual harm. They pose a threat to societal trust and integrity, as they can be used to spread misinformation and manipulate public opinion. The potential for deepfakes to be used for political gain or to incite violence is particularly concerning. In an era of information overload and social media echo chambers, deepfakes can easily slip through the cracks and cause significant harm.

Key Points: Deepfakes and Hyper-Realistic Videos

- Deepfakes are hyper-realistic videos or audio recordings that use AI and machine learning techniques to replace one person’s face or voice with another’s.

- Deepfakes pose a serious threat to individuals, reputations, and society as a whole.

- The recent deepfake video of Alia Bhatt highlights the potential for deepfakes to cause harm and the need for safeguards.

- Deepfakes can be used to spread misinformation, manipulate public opinion, and even influence elections.

- Addressing the deepfake challenge requires a multi-pronged approach involving technology companies, policymakers, and individuals.

The emergence of deepfake technology demands a collective response from individuals, organizations, and governments. Technology companies must invest in developing tools to detect and prevent deepfakes. Policymakers need to establish clear guidelines and regulations around deepfake creation and distribution. Individuals must become more discerning consumers of online content and be aware of the potential for manipulation.

The deepfake video of Alia Bhatt is a wake-up call to the dangers of this technology and the urgent need for safeguards. By working together, we can mitigate the risks posed by deepfakes and ensure that this powerful technology is used responsibly for the benefit of society.

The Real Threat of Deepfakes

Delving into the Creation of Deepfakes

Deepfakes, the synthetic media that seamlessly blends one person’s likeness onto another, are the product of sophisticated AI and machine learning techniques. These techniques, collectively known as deep learning, enable computers to analyze vast amounts of data and identify patterns, allowing them to mimic human appearance and behavior with remarkable realism.

The creation of a deepfake typically involves two main stages: data collection and model training. In the first stage, deep learning algorithms are fed a large dataset of images or audio recordings of the target person, capturing their facial expressions, body movements, voice patterns, and other distinguishing features. This dataset serves as the foundation for the algorithm to learn the person’s unique characteristics.

The second stage involves training a deep neural network, a layered model loosely inspired by the way neurons connect in the human brain. The network analyzes the training data and learns the underlying patterns that define the target person’s appearance or voice. Once trained, it can generate new images or audio recordings that closely resemble the target person, even for scenes or statements for which no real-world footage exists.

Unveiling the Spectrum of Deepfakes

Deepfakes encompass a variety of techniques, each with its own unique capabilities and limitations. Some common deepfake techniques include:

- Face Swapping: This technique replaces the face of one person with that of another in a video or image.

- Voice Cloning: This technique synthesizes a person’s voice, making it possible to generate speech the person never actually said.

- Lip Syncing: This technique manipulates the lip movements of one person in a video to match the audio of another person.

- Full-Body Deepfakes: These complex deepfakes manipulate both the face and body of a person in a video.

Deepfakes have a wide range of potential applications, including entertainment, education, and even medical diagnosis. However, the potential for misuse far outweighs these benefits. Deepfakes can be used to spread misinformation, fabricate events that never happened, and damage reputations.

Ethical Concerns and the Potential for Misuse

Deepfakes raise serious ethical concerns, particularly when used for malicious purposes. The ability to manipulate reality with such precision poses a significant threat to trust, transparency, and accountability. Deepfakes can be used to create fake news, spread propaganda, and undermine public trust in institutions.

Moreover, deepfakes can be used to inflict harm on individuals. They can be used to create non-consensual pornography, spread rumors and lies about someone’s character, and even blackmail or extort individuals. The potential for deepfakes to cause emotional distress, damage reputations, and even threaten careers is a serious concern.

The misuse of deepfakes is not merely a hypothetical scenario; it is already happening. Deepfakes have been used to create fake celebrity porn, spread political propaganda, and even implicate innocent people in crimes they did not commit. The potential for deepfakes to cause real harm is undeniable.

Addressing the Ethical Dilemma

Addressing the ethical concerns surrounding deepfakes requires a multi-faceted approach that involves technology companies, policymakers, and individuals. Technology companies must develop tools to detect and prevent deepfakes from being created and distributed. Policymakers need to establish clear guidelines and regulations around deepfake creation and use. Individuals must become more discerning consumers of online content and be aware of the potential for manipulation.

The ethical use of deepfakes requires transparency and accountability. Deepfakes should be clearly labeled as such, and their creators should be identifiable. Moreover, there should be clear legal consequences for those who use deepfakes for malicious purposes.

Deepfakes represent a powerful technology with the potential to both benefit and harm society. The real threat of deepfakes lies in their ability to manipulate reality and inflict harm on individuals and society as a whole. Addressing the ethical concerns surrounding deepfakes requires a collective effort from technology companies, policymakers, and individuals to ensure that this technology is used responsibly and ethically.

Deepfakes Targeting Indian Actresses

In the realm of Indian cinema, deepfakes have emerged as a disturbing trend, targeting prominent actresses and causing significant distress. These deepfake videos, often non-consensually created and distributed, have not only harmed the reputations of the actresses but also raised broader concerns about privacy, consent, and the impact on Indian society.

In 2023, the deepfake video of Alia Bhatt, a celebrated Bollywood actress, sent shockwaves through the industry. The video, which circulated widely on social media, superimposed Bhatt’s face onto an adult film actress, causing her immense distress and threatening her career. The incident highlighted the potential for deepfakes to be used for malicious purposes, causing irreparable harm to individuals.

Similarly, Rashmika Mandanna, a popular actress in South Indian cinema, fell victim to a deepfake video that depicted her entering a lift wearing a low-cut top. The video was widely shared and commented on, causing significant embarrassment and emotional distress to Mandanna. This incident further underscored the pervasive nature of deepfakes and their ability to intrude on an individual’s privacy.

The deepfakes targeting Kajol Devgan, a veteran Bollywood actress, and Katrina Kaif, another prominent figure in the industry, further exacerbated the issue. These deepfakes, often depicting the actresses in compromising situations, served as stark reminders of the vulnerability of women in the digital age.

The motivations behind these deepfakes are often complex and multifaceted. In some cases, the creators may be driven by a desire for personal gain, seeking attention or notoriety through the creation and distribution of these harmful videos. Others may be motivated by revenge, seeking to damage the reputations of the actresses or inflict emotional pain.

The impact of these deepfakes on the actresses has been profound. The actresses have experienced emotional distress, reputational damage, and fear for their safety. The constant threat of having their likeness manipulated and misused has caused significant anxiety and stress, affecting their personal and professional lives.

Beyond the impact on individual actresses, these deepfakes have broader implications for Indian society. They contribute to a culture of cyberbullying and harassment, particularly targeting women. They also erode trust in online content, making it increasingly difficult to discern between genuine and manipulated media.

Addressing the deepfake menace requires a multi-pronged approach. Technology companies need to develop effective tools for detecting and removing deepfakes. Law enforcement agencies need to take a proactive stance in identifying and prosecuting the creators of these harmful videos. Social media platforms need to implement stricter guidelines for content moderation.

Moreover, individuals need to become more aware of the threat posed by deepfakes and exercise caution when consuming online content. They should be critical of the information they encounter and avoid sharing potentially harmful videos.

The deepfakes targeting Indian actresses serve as a stark reminder of the dangers posed by this technology. The potential for deepfakes to cause harm, particularly to women, is undeniable. Addressing this issue requires a concerted effort from technology companies, law enforcement, social media platforms, and individuals to ensure that deepfakes are not used to inflict harm and violate privacy.

Safeguarding Against Deepfakes

The emergence of deepfakes has created a pressing need for effective safeguards to mitigate the risks posed by this technology. Detecting and preventing deepfakes presents significant challenges, as the technology continues to evolve and become more sophisticated. However, a multi-faceted approach involving technology companies, policymakers, and individuals can help protect against the harmful impact of deepfakes.

Challenges in Detecting and Preventing Deepfakes

Detecting deepfakes is a complex task due to the inherent difficulty in distinguishing between real and manipulated media. Deepfake creators are constantly refining their techniques, making it increasingly challenging for detection algorithms to keep pace. The human eye is often unable to spot subtle inconsistencies in deepfakes, especially in low-resolution or fast-paced videos.

Moreover, preventing the creation and distribution of deepfakes is a formidable challenge. The decentralized nature of the internet and the ease with which deepfake creation tools can be accessed make it difficult to control the flow of this harmful content. Social media platforms, where deepfakes often spread rapidly, face the daunting task of moderating vast amounts of user-generated content.

The Role of Technology Companies in Combating Deepfakes

Technology companies play a crucial role in combating deepfakes by developing and deploying effective detection and prevention tools. Artificial intelligence and machine learning techniques offer promising approaches for identifying deepfakes. Algorithms can analyze patterns in audio and video data to detect subtle inconsistencies that may indicate manipulation.
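To make the idea of spotting "subtle inconsistencies" a little more concrete, here is a minimal sketch in Python. It assumes that some upstream model has already produced one inconsistency score per video frame (for example, how poorly a face region blends with its surroundings); those scores, the threshold, and the function name are all hypothetical. Real detectors are trained neural networks, so treat this only as a toy outlier check using the median and the median absolute deviation.

```python
from statistics import median


def flag_suspicious_frames(scores, threshold=3.5):
    """Return indices of frames whose score is an outlier.

    Uses the modified z-score (median and median absolute deviation),
    which is less sensitive to a few extreme values than mean/stddev.
    The per-frame scores are assumed to come from a separate model.
    """
    scores = list(scores)
    med = median(scores)
    mad = median([abs(s - med) for s in scores])
    if mad == 0:
        # All typical frames are identical; anything different stands out.
        return [i for i, s in enumerate(scores) if s != med]
    return [
        i for i, s in enumerate(scores)
        if 0.6745 * abs(s - med) / mad > threshold
    ]


# Hypothetical per-frame scores; frame 4 looks very different from the rest.
frame_scores = [0.10, 0.12, 0.11, 0.13, 0.95, 0.12, 0.10]
print(flag_suspicious_frames(frame_scores))  # [4]
```

A flagged frame is not proof of manipulation. In practice, a check like this would only prioritize content for closer review by a stronger model or a human moderator.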

In addition to detection tools, technology companies can develop tools that can help prevent the creation of deepfakes in the first place. This may involve watermarking media with unique identifiers or developing techniques to trace the origin of deepfakes back to their creators.
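The "unique identifier" idea can be illustrated with a simplified stand-in: a signed provenance tag attached to a media file. The sketch below is not an in-pixel watermark and is not any particular company’s scheme; it assumes a publisher holds a secret key and uses Python’s standard `hashlib` and `hmac` modules to tag the file’s bytes. The key, the sample bytes, and the function names are hypothetical.

```python
import hashlib
import hmac


def sign_media(media_bytes: bytes, publisher_key: bytes) -> str:
    """Return a hex provenance tag: HMAC-SHA256 over the media's SHA-256 digest."""
    digest = hashlib.sha256(media_bytes).digest()
    return hmac.new(publisher_key, digest, hashlib.sha256).hexdigest()


def verify_media(media_bytes: bytes, publisher_key: bytes, tag: str) -> bool:
    """Check that the media still matches the tag issued by the publisher."""
    expected = sign_media(media_bytes, publisher_key)
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, tag)


original = b"frame-data-of-an-authentic-clip"  # placeholder for real file bytes
key = b"hypothetical-publisher-secret"
tag = sign_media(original, key)

print(verify_media(original, key, tag))         # True
print(verify_media(original + b"x", key, tag))  # False
```

Any change to the file, including a face swap, alters its hash and makes verification fail. The trade-off is that a tag like this only shows that a file is unchanged since it was signed; it cannot by itself identify who created a manipulated copy.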

Moreover, technology companies can work with content creators and distributors to establish clear guidelines and best practices for using deepfakes responsibly. This includes clearly labeling deepfakes as such and obtaining consent from individuals before using their likeness in manipulated media.

Policy and Legal Solutions to Address the Deepfake Threat

Governments and policymakers can play a significant role in addressing the deepfake threat by establishing clear regulations and legal frameworks. Laws can be enacted to criminalize the creation and distribution of harmful deepfakes, particularly those that target individuals or spread misinformation.

Policymakers can also work with technology companies to develop industry-wide standards for deepfake detection and prevention. This could involve creating standardized datasets for training detection algorithms and establishing protocols for data sharing between companies.
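One reason standardized datasets matter is that they let different detectors be compared on the same labeled examples. As a rough sketch, assuming a shared benchmark where each clip is labeled real or fake, two common measures are precision (how many flagged clips were truly fake) and recall (how many fake clips were caught). The labels and detector outputs below are invented for illustration.

```python
def precision_recall(labels, predictions):
    """Compute precision and recall for a deepfake detector.

    labels:      True where the clip really is a deepfake.
    predictions: True where the detector flagged the clip.
    """
    pairs = list(zip(labels, predictions))
    tp = sum(1 for y, p in pairs if y and p)        # fakes correctly flagged
    fp = sum(1 for y, p in pairs if not y and p)    # real clips wrongly flagged
    fn = sum(1 for y, p in pairs if y and not p)    # fakes that were missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical benchmark of eight clips and one detector's verdicts.
labels = [True, True, True, False, False, False, False, True]
detector_a = [True, True, False, False, False, True, False, True]
print(precision_recall(labels, detector_a))  # (0.75, 0.75)
```

Reporting both numbers on a common dataset makes it harder for a tool to look good simply by flagging everything or by flagging almost nothing.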

In addition, governments can invest in research and development to advance deepfake detection technologies and explore innovative approaches to combating this threat. This could involve funding academic research, supporting open-source projects, and collaborating with technology companies to develop cutting-edge solutions.

Individual Awareness and Responsibility

Individuals also have a critical role to play in safeguarding against deepfakes. By becoming more aware of the potential for manipulation, individuals can make informed decisions about the content they consume and share online. This includes being critical of the information they encounter, verifying sources, and avoiding sharing potentially harmful content.

Moreover, individuals can reduce their exposure to deepfakes by maintaining strong online privacy practices, which limit how many of their photos, videos, and voice recordings are available for misuse. This includes using strong passwords, enabling two-factor authentication, and being cautious about sharing personal information online.

Safeguarding against deepfakes requires a collective effort from technology companies, policymakers, and individuals. By working together, we can establish effective detection and prevention mechanisms, develop clear regulations, and raise individual awareness to mitigate the risks posed by this technology.

The Future of Deepfakes

Potential Benefits of Deepfakes

While deepfakes pose significant risks, they also hold the potential for positive applications if used responsibly. Deepfakes could be used to:

- Enhance accessibility: Deepfake techniques such as voice cloning and lip syncing could be used to dub audio and video content into other languages with matching lip movements, making it more accessible to people who speak different languages or who rely on lip reading.

- Educate and entertain: Deepfakes could be used to create educational videos or simulations that are more engaging and interactive for students. They could also be used to create immersive entertainment experiences.

- Preserve history: Deepfakes could be used to restore or recreate historical footage, bringing the past to life in a more vivid way.

The Future of Deepfake Technology

Deepfake technology is rapidly evolving, and it is likely to become even more sophisticated in the future. This will make it increasingly difficult to distinguish between real and manipulated media, which could have profound implications for society.

One of the most concerning aspects of the future of deepfakes is the potential for them to be used to create hyper-realistic propaganda or to manipulate public opinion. Deepfakes could be used to make it appear as if a politician or celebrity has said or done something they never did, potentially influencing elections or spreading misinformation.

To address the potential harms of deepfakes, it is crucial for individuals, organizations, and governments to work together. Here are some specific actions that can be taken:

Individuals:

- Be critical of the information you consume online. Don’t believe everything you see or hear, and take the time to verify sources.

- Be cautious about sharing content online. Before sharing something, consider whether it is genuine or could be a deepfake.

- Report deepfakes that you come across. Most social media platforms have reporting mechanisms for deepfakes.

Organizations:

- Develop and deploy effective deepfake detection tools. Technology companies can invest in research and development to create tools that can accurately identify deepfakes.

- Establish clear guidelines for the use of deepfakes. Media organizations can develop guidelines for their journalists and content creators on the responsible use of deepfakes.

- Educate the public about deepfakes. Schools and other organizations can provide education about the risks and potential harms of deepfakes.

Governments:

- Enact laws against harmful deepfakes. Governments can make it illegal to create or distribute deepfakes that are intended to cause harm or spread misinformation.

- Support research into deepfake detection and prevention. Governments can fund research into developing new technologies to combat deepfakes.

- Collaborate with other countries to address the global deepfake threat. Governments can work together to share information and develop international standards for deepfake regulation.

By taking these actions, we can work towards a future where deepfakes are used responsibly and ethically, and where the public can trust the information they consume online.

A Collective Call to Address the Deepfake Challenge

The emergence of deepfake technology has ushered in an era of unprecedented digital manipulation, blurring the lines between reality and fabrication. This powerful technology, while holding the potential for positive applications, poses a significant threat to individuals, communities, and society as a whole. The ability to seamlessly blend one person’s likeness onto another, creating hyper-realistic videos or audio recordings, raises serious concerns about privacy, misinformation, and the manipulation of public opinion.

The deepfake videos targeting Alia Bhatt, Rashmika Mandanna, Kajol, and Katrina Kaif serve as stark reminders of the potential harm deepfakes can inflict. These incidents have caused emotional distress, reputational damage, and fear for the safety of the actresses, highlighting the vulnerability of individuals in the digital age. The broader implications of these deepfakes extend to the erosion of trust in online content and the potential for societal manipulation.

Addressing the deepfake challenge requires a collective effort from individuals, organizations, and governments. Technology companies must invest in developing effective detection and prevention tools, while policymakers need to establish clear guidelines and regulations around deepfake creation and distribution. Individuals, as consumers of online content, must exercise caution and become more discerning in identifying and avoiding harmful deepfakes.

The importance of individual awareness and responsibility cannot be overstated. By being critical of the information we consume online, verifying sources, and avoiding the sharing of potentially harmful content, we can contribute to a safer digital environment. Education and awareness campaigns should play a crucial role in empowering individuals to recognize and combat deepfakes.

The deepfake challenge is not merely a technological issue; it is a societal one. It demands a collective response that encompasses technological advancements, regulatory frameworks, and individual vigilance. By working together, we can harness the potential of deepfakes while mitigating their risks, ensuring that this technology serves to benefit society rather than harm it.
